Question level analysis in science

Question level analysis has become popular recently when teachers review assessments. In some ways it represents the magic bullet: find out what topics students did badly on and then focus revision and re-teaching specifically on those areas. I was so convinced it would work that I spent many hours inputting marks for individual questions into Excel to generate personalised question level analyses. Sadly, I’m not sure this was time well spent, as I didn’t think enough about the data I was collecting.

What’s the problem with question level analysis by topic in science?

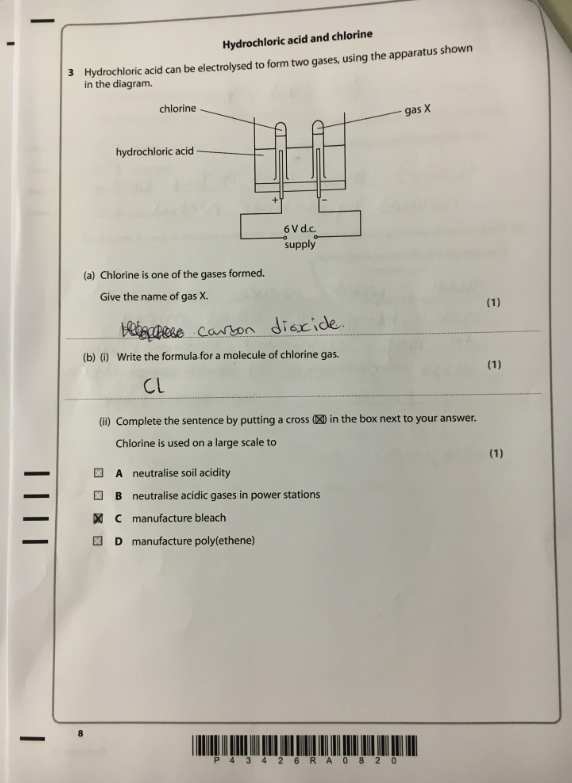

Take this exam question on the topic of electrolysis. This student scored 1/3. Analysis of the paper by topic would suggest that this student needs to go away and learn electrolysis. But do they? If we look more closely at the individual elements of this question, we can see that there are actually some other, much more fundamental aspects of chemistry that this student does not understand.

Let’s look at 3(a). The student gets this answer wrong. But more interesting than that, the student states it was carbon dioxide and therefore demonstrates a more fundamental misunderstanding: they don’t know that elements are always conserved in chemical reactions. The only possible substances that could be formed from HCl are hydrogen or chlorine. If they did understand this concept of conservation, then carbon dioxide could never have been an option. It is possible they think carbon dioxide contains H or Cl atoms, but whatever the ‘gap’, I don’t think it will be addressed with revision of electrolysis.

Now let’s look at 3(b). The student states that the formula of a molecule of chlorine is Cl instead of Cl2. The student clearly does not understand the concept of diatomic molecules. Simply reviewing the paper in class and getting students to make corrections will only bring about progress if that exact question appears again. A much more effective approach would be to review diatomic molecules and covalent bonding, as this is not a misunderstanding of electrolysis per se.

What should we do instead?

Recording student marks on specific topics is a very blunt tool indeed for performing meaningful question level analysis. So what are we to do with the data without huge investments in technology? The first question you must ask yourself is whether there is merit in analysing a summative assessment in the first place. Summative papers often only assess a tiny fraction of the course, so spending time on questions that may never reappear could be time wasted. Reviewing formative, diagnostic tests that assess discrete aspects individually could be a much more powerful approach.

If you are convinced that lessons can be learned from reviewing summative assessments, then I think it’s all about using the expert – the teacher who marks the paper. Teachers must keep a note of common errors when they mark papers; a good tip is to annotate a blank copy of the assessment as you mark. Then, rather than just go through the paper in class, tasks should be designed to re-teach concepts and address misconceptions. In this case, students could be asked to predict the possible gases that could and could not be formed from a number of formulae (e.g. H2O, HBr, CaCO3). If students are successful in completing this exercise then real progress has been made, and this new knowledge and understanding will have benefits well beyond questions on electrolysis.
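For anyone who wants to generate an answer key for that kind of exercise, here is a minimal sketch of the conservation check in Python. It is only an illustration: the function names and the short list of candidate gases are my own assumptions, and the check only tests whether every element in a candidate gas is present in the starting formula. That is a necessary condition, not a guarantee that the gas actually forms.

```python
import re

# Hypothetical shortlist of gases to offer students as options.
CANDIDATE_GASES = ["H2", "O2", "Cl2", "CO2"]


def elements_in(formula: str) -> set[str]:
    """Return the element symbols in a simple formula such as 'CaCO3'.

    Assumes plain formulae: a capital letter, an optional lowercase
    letter, and optional digits. Brackets and charges are not handled.
    """
    return set(re.findall(r"[A-Z][a-z]?", formula))


def could_form(reactant: str, product: str) -> bool:
    """True if every element in the product is also in the reactant."""
    return elements_in(product) <= elements_in(reactant)


def possible_gases(reactant: str) -> list[str]:
    """Candidate gases that do not break conservation of elements."""
    return [gas for gas in CANDIDATE_GASES if could_form(reactant, gas)]


for formula in ["HCl", "H2O", "HBr", "CaCO3"]:
    print(formula, possible_gases(formula))
```

With this shortlist, carbon dioxide is ruled out for HCl because neither carbon nor oxygen appears in the formula, which is exactly the reasoning the student in 3(a) was missing.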

Further reading

A nice summary of how to do QLA well from Dr Austin Booth.

- Summative assessments in science

- Assessment design – 10 principles

- Standardisation and marking accurately

- Question level analysis

- Planning for re-teach

- Peer assessment in science

- Written feedback in science

- Assessing scientific skills in science