Assessment design

Driving the right behaviours: 10 principles of assessment design

Whether we like it or not, assessment drives behaviours. And this is true for both schools and life. Now that’s not a problem, as long as the assessment drives the ‘right’ behaviours.

Here we’re looking at how to design interim assessments for subjects assessed using a difficulty model i.e. where questions get progressively harder.

Interim assessments are the hybrids of the assessment world; neither truly summative nor properly formative. Often referred to as termly assessments, these tests provide information on how students perform relative to their peers, whilst providing some opportunity for feedback and re-teaching.

I hope the ten principles below will be useful to anyone building assessments and can help drive the right behaviours in school: assessment that is in service to a great curriculum and leads to informed actions.

1. Assess the most important knowledge you want students to know

What we care about is not ‘measuring students’; what we care about is measuring whether students have understood the right things in a specific subject. This means we must identify the most important knowledge, the knowledge that is essential for good progression, and design questions to assess it.

2. Have a clear model of progression for both substantive and disciplinary knowledge

It’s not just the substantive knowledge (facts of the subject) that we want to assess. We also need to assess the disciplinary knowledge – this is the knowledge about how a subject generates new knowledge. In science this is the knowledge required to work scientifically, mathematically and practically. And just like principle one, this assessment can only happen if the curriculum has first defined the progression of both types of knowledge over time.

3. Make the assessment cumulative

Because we learn new things by relating them to what we already know, it’s important that previous knowledge is not forgotten and disposed of. This means we need to create assessments that assess concepts that have been learnt in previous terms/years. As a general rule, 60% of marks assessing this term’s work and 40% assessing previous terms/years works well. This balance, between assessing old and new work, ensures the assessment feels relevant to students and teachers by providing feedback on recent work, but still encourages ideas met earlier in the course to be revisited.
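As a rough illustration of the 60/40 rule of thumb, here is a minimal Python sketch that turns a paper total into marks for this term’s work and marks for earlier work. The function name `split_marks` and the 90-mark total are assumptions made for the example, and the 60% share is a guideline that can be adjusted.

```python
def split_marks(total_marks, recent_percent=60):
    """Split a paper's marks between this term's work and earlier work.

    recent_percent is the share (0-100) given to this term's content;
    the remainder goes to content from previous terms/years.
    """
    if not 0 <= recent_percent <= 100:
        raise ValueError("recent_percent must be between 0 and 100")
    recent = round(total_marks * recent_percent / 100)
    previous = total_marks - recent  # remainder, so the two always sum to the total
    return recent, previous


# Illustrative 90-mark paper with the 60/40 split
recent, previous = split_marks(90)
print(recent, previous)  # 54 36
```

Giving the remainder to earlier work, rather than rounding both shares separately, keeps the two numbers adding up to the paper total.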

4. Sample across the domain and keep it blind

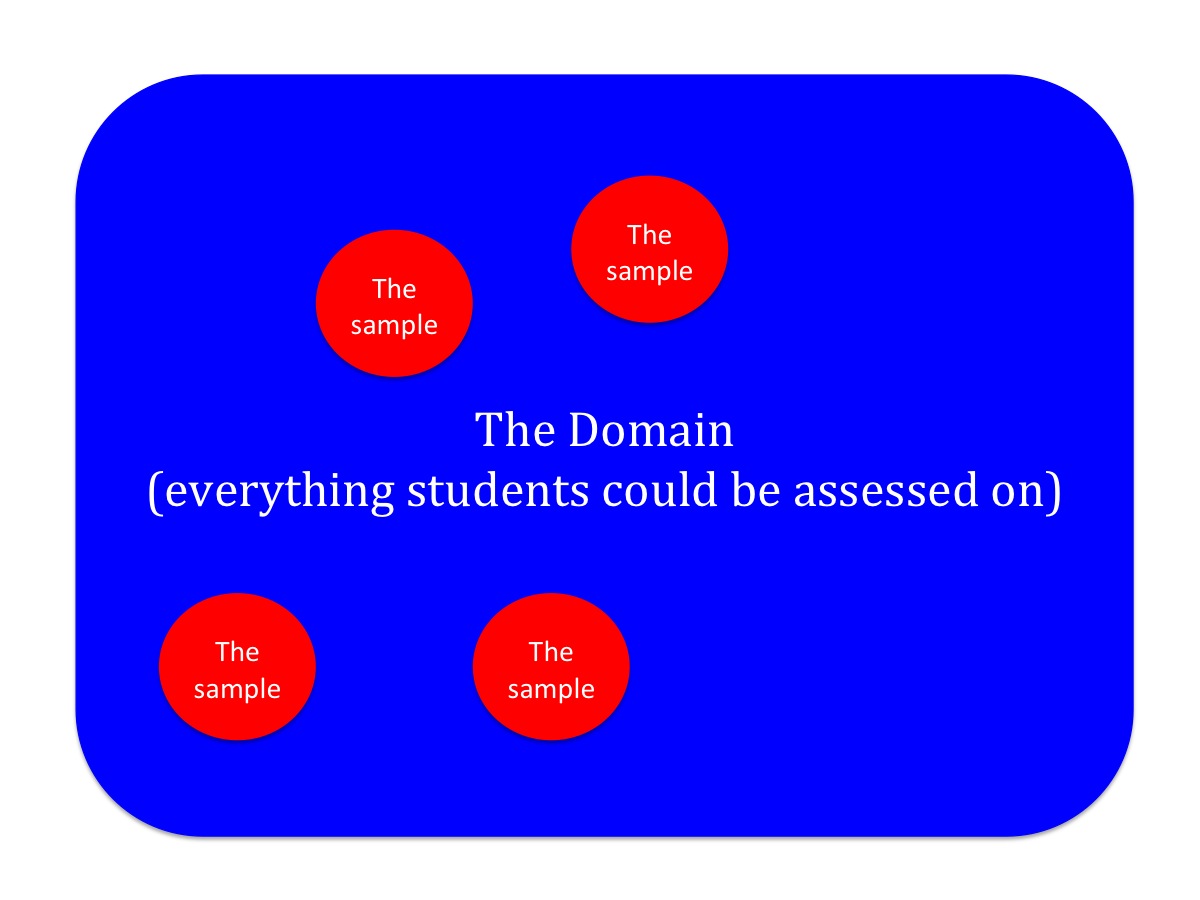

Many subjects require students to learn more knowledge than can ever be assessed in its entirety – there’s just not enough time.

So, just as a biologist will use a few quadrats to estimate the population of daisies in an entire field, we will need to estimate student attainment by assessing only a subset of what has been taught. This sampling can, though, have unintended consequences by encouraging teaching to the test. We can address this problem by sampling across the domain in an unpredictable way. In subjects such as science and geography we can achieve this unpredictability by making assessments blind each time, i.e. not seen by teachers or students beforehand. If you’re writing your own tests you can still achieve this ‘blindness’ by writing a few assessments that collectively cover the entire domain. You then randomly select the assessment to use and repeat this process each year thereafter.
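For anyone who keeps their papers in a spreadsheet or script, the ‘write a few papers, then pick one at random’ approach could be sketched in Python roughly as follows. The paper names, the topic lists and the helper functions `uncovered_topics` and `pick_paper` are all hypothetical, made up for this example.

```python
import random

# Hypothetical pool: each paper and the topics it samples
PAPERS = {
    "Paper A": {"cells", "forces", "particles"},
    "Paper B": {"energy", "particles", "ecosystems"},
    "Paper C": {"cells", "energy", "forces", "ecosystems"},
}

# Hypothetical domain: everything that has been taught
DOMAIN = {"cells", "forces", "particles", "energy", "ecosystems"}


def uncovered_topics(papers, domain):
    """Return the topics in the domain that no paper in the pool assesses."""
    covered = set().union(*papers.values())
    return domain - covered


def pick_paper(papers, rng=random):
    """Choose one paper name at random from the pool."""
    return rng.choice(sorted(papers))


# The pool should collectively cover the whole domain before it is used
assert not uncovered_topics(PAPERS, DOMAIN)

# Repeat this draw each year; nobody knows in advance which paper comes up
print(pick_paper(PAPERS))
```

The coverage check matters as much as the random draw: if a topic appears in none of the papers, it can safely be skipped in teaching, which is exactly the behaviour blind sampling is meant to discourage.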

5. Dig holes, don’t cut grass – assess conceptual understanding

This principle is about making sure that the assessment asks the questions that we really care about: how well do students understand the specific concepts that are being learnt? To get at understanding we must design questions that probe students’ schemas (we need to dig holes). Too many surface-level questions (cutting grass) that don’t get at the deep structures will encourage surface learning and lead to bunching of marks. For example, asking students to arrange the following in order of size: atom, cell, particle, nucleus will give you a richer insight into their understanding of scale than simply asking students to define the terms. So, probing questions don’t have to be extended responses; MCQs and short answers are fine. They just need to assess whether students understand the relationships between all this knowledge, and so report effectively on understanding and not mimicry.

6. Be clear on what you are and are not assessing

Of course we want to challenge students within an assessment but we must do this with intentionality. As soon as we introduce a context we are increasing the size of the domain we are assessing. For example, we could ask students to draw a diagram to show the arrangement of particles in a gas. Or, we could ask them to draw the arrangement of particles inside Sally’s helium balloon she was given at a party. Although the answer would look the same we can’t be sure about the inference of a wrong answer. Does the student not know what a balloon is? Or is the problem helium, Sally or the particle model? The key point here is to check you are assessing what you think you are and be cautious of what comprises everyday, assumed knowledge when you include it in a question.

7. Mark schemes should inform how questions are designed

Create the mark scheme at the same time as you write the question. It’s only when you write each marking point that you understand whether the question is actually a good one, i.e. is assessing what you want it to (see point 6) – and even then you will get surprises when students sit the exam. Mark schemes can’t capture every possible acceptable phrasing. They must, though, clearly define the key aspect/knowledge/concept that is being assessed and identify the answers that should be rejected, ignored and carried forward.

8. Make the assessment appropriately demanding

Having foundation and higher tier papers can increase the ability of an assessment to distinguish between candidates (i.e. increase precision) and prevent students from having to endure inappropriate questions. Un-tiered assessments provide a common currency to compare students and, importantly, don’t require teachers to make predictions of student performance before an exam. They do, though, carry the risk that there aren’t enough questions to distinguish students, resulting in narrow grade boundaries. My preference in science is to leave tiering decisions until Year 10 and give all students the same opportunity – this does require that you construct un-tiered papers that are appropriate for all. As a general rule, assessments with 30% low demand questions (easy for that age-group), 40% standard demand questions (OK for that age-group) and 30% high demand questions (hard for that age-group) seem to work. Ramping in difficulty both within a question and throughout a paper will provide opportunities for most students to keep going and not give up. And the assessments need to be long enough but not too long – about 90 marks, sat in a total time of 1h30, should do it.
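To make the arithmetic concrete, the 30/40/30 demand profile and the 90 marks in 1h30 guideline can be worked through in a few lines of Python. This is only a sketch: the function names `demand_profile` and `minutes_per_mark` are invented for the example, and the percentages are rules of thumb, not fixed requirements.

```python
def demand_profile(total_marks, low=30, standard=40, high=30):
    """Split a paper's marks across low, standard and high demand questions.

    low, standard and high are percentages and must add up to 100.
    """
    if low + standard + high != 100:
        raise ValueError("percentages must add up to 100")
    low_marks = round(total_marks * low / 100)
    high_marks = round(total_marks * high / 100)
    # Standard demand takes the remainder so the three always sum to the total
    standard_marks = total_marks - low_marks - high_marks
    return {"low": low_marks, "standard": standard_marks, "high": high_marks}


def minutes_per_mark(total_marks, total_minutes):
    """Average time available per mark."""
    return total_minutes / total_marks


print(demand_profile(90))        # {'low': 27, 'standard': 36, 'high': 27}
print(minutes_per_mark(90, 90))  # 1.0
```

So a 90-mark paper would have roughly 27 marks of low demand, 36 of standard demand and 27 of high demand, at about a mark a minute.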

9. Assessments should balance workload with effectiveness

When creating any assessment we must balance the need for quality assessment information with the need for making workload sustainable. Multiple choice questions and comparative judgement can help here. And think carefully about the frequency of these tests: two interim assessments per year seems a balanced approach for non-examination groups, allowing more time for teaching and more time to respond to the assessment information when it comes.

10. Provide opportunities for teachers to practise applying the mark scheme

It sounds an obvious point, but any assessment is only as reliable as the quality of marking that sits behind it. Marking accurately requires real expertise, so it’s definitely worth spending time creating standardisation material that teachers can use to develop their practice. I’ve written about standardisation in another post but perhaps the most practical way to do this is to create a dummy script i.e. one that has pretend student answers, and use this as a stimulus for a discussion on how to apply the mark scheme. Not only does this improve marking accuracy, it also supports teachers to develop their understanding of what great student work looks like.